Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Orchestrating and Hosting Large Language Models in Cloud Environments

Meta Summary: Delve into the complexities of deploying large language models (LLMs) in cloud environments. Discover how orchestration, containerization, GPU strategies, load balancing, and monitoring form the backbone of successful LLM deployment while maintaining high availability and performance.

In the rapidly evolving landscape of cloud computing, the effective deployment and management of large language models (LLMs) pose significant challenges. These models, powering applications from natural language processing to complex data analysis, require sophisticated strategies to maximize their potential. This article explores orchestrating and hosting LLMs in cloud environments, offering insights into best practices, common pitfalls, and real-world applications.

Introduction to Orchestration and Hosting in the Cloud

Hosting large language models in cloud environments presents unique challenges due to their size and computational demands. Orchestration automates the arrangement, coordination, and management of complex systems and services, ensuring efficient deployment and management of LLMs.

Role of Orchestration in Cloud Deployment

Orchestration handles scaling and resource allocation automatically, both of which are crucial for maintaining the performance and availability of LLMs. Given their size and complexity, LLMs demand robust orchestration frameworks that support dynamic scaling and resource optimization.

Note: Orchestration can significantly improve system efficiency, often being the linchpin in high-performance cloud deployments.

Enhancing LLM Deployment through Containerization Techniques

Containerization packages an application and its dependencies into a single container image, which streamlines deployment. This is a significant advantage when the same LLM service must run across diverse environments.

Leveraging Docker and Kubernetes

Packaging an LLM service as a Docker image makes it portable and reproducible across environments. Running those images on Kubernetes, an open-source container orchestration platform, adds the scheduling and scaling that make large deployments manageable.
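As a rough illustration of what a containerized LLM deployment can look like, the sketch below builds a Kubernetes `apps/v1` Deployment manifest as a plain Python dictionary and prints it as JSON. The service name, image reference, port, and replica count are hypothetical placeholders, and the `nvidia.com/gpu` limit assumes the cluster runs the NVIDIA device plugin; none of these come from a specific deployment described in this article.

```python
import json


def build_llm_deployment(name, image, replicas=2, gpus_per_pod=1, port=8000):
    """Return a Kubernetes apps/v1 Deployment manifest as a plain dict.

    All argument values are illustrative; adjust them to the real service.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            # Assumes the NVIDIA device plugin exposes GPUs
                            # under the "nvidia.com/gpu" resource name.
                            "resources": {
                                "limits": {"nvidia.com/gpu": gpus_per_pod}
                            },
                        }
                    ]
                },
            },
        },
    }


# Hypothetical service name and image reference, for illustration only.
manifest = build_llm_deployment(
    "llm-server", "registry.example.com/llm-server:1.0.0"
)
print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and applied directly. Generating the manifest from code is only one option; many teams keep hand-written YAML or use a templating tool instead.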

Real-World Impact of Containerization

One large technology company that deployed its LLMs on Kubernetes reported a 40% increase in resource utilization and markedly shorter deployment times.

Tip: Regularly update containers to incorporate the latest security patches for enhanced security and stability.

Streamlining Performance with GPU Orchestration Strategies

GPUs (Graphics Processing Units) are the standard hardware for machine learning workloads because they execute the large matrix operations behind LLMs in parallel. Efficient GPU resource management is therefore crucial for optimizing LLM performance and cost.

Tools for Effective GPU Resource Management

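NVIDIA GPU Cloud (NGC) provides a catalog of GPU-optimized containers and pretrained models that can serve as a starting point. Allocation of GPUs to workloads is handled by the scheduler: on Kubernetes, the NVIDIA device plugin exposes GPUs as a schedulable resource, so each pod requests only the GPUs it needs. Matching allocation to workload demand in this way cuts costs and improves efficiency.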

Real-World Case Study

An AI startup reported a 50% improvement in LLM training cycles using NVIDIA’s GPU cloud, an example of cost-effective performance gains through strategic GPU orchestration.

Note: Implement auto-scaling for GPUs to adapt resource usage based on real-time demands, enhancing both efficiency and cost-effectiveness.
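To make the auto-scaling idea concrete, the sketch below applies the replica calculation used by the Kubernetes Horizontal Pod Autoscaler, `ceil(currentReplicas * currentMetric / targetMetric)`, to an average GPU utilization figure. It assumes a GPU utilization metric is already being collected and exposed to the autoscaler; the function name, replica bounds, and sample numbers are illustrative, and a real autoscaler adds behaviour (stabilization windows, multiple metrics) that is not modelled here.

```python
import math


def desired_replicas(current_replicas, current_util, target_util,
                     min_replicas=1, max_replicas=8, tolerance=0.1):
    """Scale replica count in proportion to observed vs. target utilization.

    current_util and target_util are average GPU utilization percentages.
    Within the tolerance band the replica count is left unchanged to avoid
    flapping on small fluctuations.
    """
    if current_replicas < 1:
        raise ValueError("current_replicas must be at least 1")
    if target_util <= 0:
        raise ValueError("target_util must be positive")

    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)

    # Clamp to the configured bounds (illustrative defaults).
    return max(min_replicas, min(max_replicas, desired))


# Four replicas at 90% average utilization against a 60% target.
print(desired_replicas(4, 90.0, 60.0))
```

Clamping to a maximum matters for GPU workloads in particular, since each extra replica may reserve an entire accelerator.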

Maintaining Service Reliability through Load Balancing

Load balancing distributes incoming requests across multiple computing resources so that no single instance becomes a bottleneck, keeping LLM services available and reliable even under high demand.

Implementing Load Balancing with AWS

Use AWS Elastic Load Balancing (ELB) to distribute incoming traffic across healthy targets, preventing bottlenecks and maintaining consistent performance. Simulated load testing can then confirm that the configuration behaves as expected under realistic traffic.
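LLM requests vary widely in duration, so a strategy that sends each request to the backend with the fewest requests in flight often behaves better than plain round robin; AWS Application Load Balancers offer a "least outstanding requests" routing algorithm along these lines. The following is a minimal in-process sketch of that idea, not a model of how ELB is implemented. The class name and backend names are hypothetical, and the code is not thread-safe.

```python
class LeastOutstandingBalancer:
    """Route each request to the backend with the fewest in-flight requests."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        # Track how many requests each backend is currently serving.
        self._in_flight = {backend: 0 for backend in backends}

    def acquire(self):
        """Pick a backend and count the new request against it."""
        # Ties are broken by the order in which backends were registered.
        backend = min(self._in_flight, key=self._in_flight.get)
        self._in_flight[backend] += 1
        return backend

    def release(self, backend):
        """Mark one request on the given backend as finished."""
        if self._in_flight[backend] > 0:
            self._in_flight[backend] -= 1

    def in_flight(self, backend):
        return self._in_flight[backend]


# Hypothetical backend names, for illustration only.
balancer = LeastOutstandingBalancer(["gpu-node-a", "gpu-node-b", "gpu-node-c"])
first = balancer.acquire()
second = balancer.acquire()
balancer.release(first)          # the first request finishes early
print(balancer.acquire())        # goes back to the now-idle backend
```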

Best Practices and Considerations

Regularly test load balancing setups to prevent single points of failure and align configurations with evolving traffic patterns.

Tip: Monitoring tools are indispensable for ongoing load-balancing performance evaluation, enabling timely adjustments to enhance reliability.

Ensuring High Availability in Cloud Environments

High availability means designing systems that remain operational with minimal downtime, a critical requirement for meeting user and business expectations in LLM deployments.

Strategies for Uptime and Fault Tolerance

Leverage multi-region deployments and automated failover processes to ensure seamless operation, even amid failures. Disaster recovery planning and proactive testing are critical for resilience.
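Automated failover usually comes down to two pieces: health checks that decide when a region is unhealthy, and a priority order that decides where traffic goes next. The sketch below shows that logic in isolation, assuming health check results are fed in from elsewhere. The class name, the failure threshold, and the use of the region names as priority-ordered labels are illustrative assumptions; in practice this is typically delegated to DNS-based or load-balancer-based failover rather than application code.

```python
class FailoverRouter:
    """Send traffic to the highest-priority region that is still healthy."""

    def __init__(self, regions, failure_threshold=3):
        if not regions:
            raise ValueError("at least one region is required")
        self._regions = list(regions)        # ordered by priority
        self._threshold = failure_threshold
        self._failures = {region: 0 for region in self._regions}

    def record_check(self, region, ok):
        """Record one health check result for a region."""
        # A single success resets the counter; failures accumulate.
        self._failures[region] = 0 if ok else self._failures[region] + 1

    def active_region(self):
        """Return the first region below the consecutive-failure threshold."""
        for region in self._regions:
            if self._failures[region] < self._threshold:
                return region
        raise RuntimeError("no healthy region available")


router = FailoverRouter(["us-east-1", "eu-west-1"], failure_threshold=2)
router.record_check("us-east-1", ok=False)
router.record_check("us-east-1", ok=False)
print(router.active_region())    # primary has failed twice in a row
```

Requiring several consecutive failures before switching is a deliberate choice: it keeps a single dropped health check from triggering an unnecessary failover.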

Tip: Keep backups current and test restores regularly. Backups are what allow data to be recovered intact after an outage.

Continuous Monitoring and Proactive Maintenance

Ongoing monitoring and maintenance are key to sustaining LLM performance and health. Implement tools like Prometheus and Grafana for comprehensive monitoring solutions.

Best Practices for Monitoring

Establish extensive logging and alert systems to identify and resolve issues promptly. Regular performance review helps anticipate and mitigate potential bottlenecks.
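A typical alert for an LLM service fires when tail latency crosses a threshold. The sketch below computes a nearest-rank percentile over a window of request latencies and compares it with a limit. In a real deployment this rule would normally live in the monitoring stack itself rather than in application code; the function names, the 95th percentile, and the thresholds here are illustrative assumptions.

```python
def percentile(values, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not values:
        raise ValueError("values must not be empty")
    if not 1 <= pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(values)
    # Integer ceiling of pct * n / 100 gives the 1-based rank.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]


def latency_alert(latencies_ms, threshold_ms, pct=95):
    """Return (should_alert, observed) for the given latency window."""
    observed = percentile(latencies_ms, pct)
    return observed > threshold_ms, observed


# Synthetic window: 100 requests taking 1 ms to 100 ms.
window = list(range(1, 101))
should_alert, p95 = latency_alert(window, threshold_ms=90)
print(should_alert, p95)
```

Alerting on a high percentile rather than the mean helps surface the slow requests that users actually notice.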

Avoid Common Pitfalls

Falling behind on monitoring configuration updates can result in missed alerts—stay proactive to keep systems running smoothly.

Note: Effective log management, such as rotation and retention limits, keeps log volume from exhausting storage and keeps operations efficient.
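One common way to keep log volume bounded is size-based rotation. The sketch below uses `RotatingFileHandler` from Python's standard library to cap a log file at a fixed size and keep only a few backups. The logger name, file name, and the deliberately tiny size limit are illustrative assumptions chosen so the demo rotates quickly; production limits would be far larger.

```python
import logging
import logging.handlers
import os
import tempfile


def make_rotating_logger(name, path, max_bytes=1_000_000, backup_count=3):
    """Create a logger whose file is rotated once it reaches max_bytes."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep demo output out of the root logger
    handler = logging.handlers.RotatingFileHandler(
        path, maxBytes=max_bytes, backupCount=backup_count
    )
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(message)s")
    )
    logger.addHandler(handler)
    return logger, handler


def demo_rotation():
    """Write many lines with a tiny size cap and list the resulting files."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "inference.log")
        logger, handler = make_rotating_logger(
            "llm.demo", path, max_bytes=500, backup_count=2
        )
        for i in range(200):
            logger.info("request %d served", i)
        # Close the handler before the temporary directory is removed.
        handler.close()
        logger.removeHandler(handler)
        return sorted(os.listdir(tmp))


# At most the active file plus backup_count rotated files remain.
print(demo_rotation())
```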

Real-World Use Cases and Applications

Large language models hold transformative potential across industries, offering innovation and business value by enhancing customer interactions and deriving data-driven insights.

Industry-Specific Applications

From healthcare to finance, LLMs automate customer service and deliver innovative solutions, enabling companies to remain competitive in a data-driven landscape.

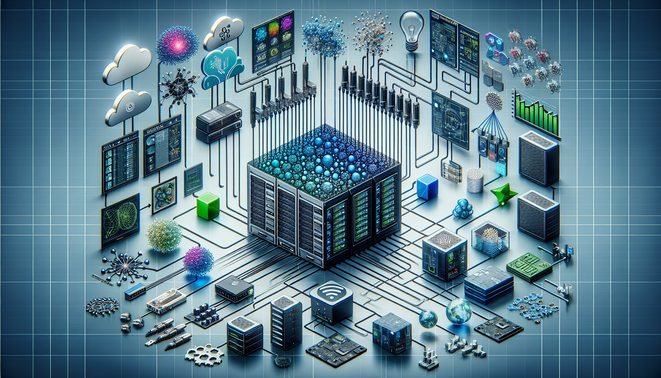

Visual Aids Suggestions

Architecture diagram showcasing LLM deployment in a containerized environment with orchestration layers.

Flowchart illustrating the load balancing process and high availability setup for LLM services.

Key Takeaways

Effective orchestration and hosting strategies are pivotal in managing LLM deployments in the cloud.

Containerization and GPU orchestration are instrumental in optimizing resources and performance.

Load balancing and high availability strategies sustain consistent uptime and reliability.

Continuous monitoring and maintenance underpin the long-term success of LLM deployments.

LLMs offer disruptive potential across multiple industries, fueling innovation and competitive advantage.

Glossary

Orchestration: Automates arrangement, coordination, and management of complex systems.

Containerization: Encapsulation of an application within a container for streamlined deployment.

GPU: Graphics Processing Unit used to accelerate computing tasks, especially in AI.

Load Balancing: Distributes workloads to enhance system efficiency and reliability.

High Availability: System design ensuring minimal operational downtime.

Knowledge Check

What is the purpose of orchestration in cloud services?

A) To manually arrange computer systems and services.

B) To automate the arrangement, coordination, and management of complex systems.

C) To simplify the deployment of single applications.

D) To reduce the need for cloud resources.

Explain how GPU orchestration can improve LLM performance.
