Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Building Scalable Infrastructure for Large Language Models

Meta Summary: Discover how to build scalable infrastructure for large language models (LLMs). Learn about essential components like compute resources, storage solutions, and networking. Understand hosting options, resource allocation strategies, vector databases, latency optimization, cost management, and best practices for successful implementation.

Introduction to Scalable Infrastructure for LLMs

Introduction Overview

Large Language Models (LLMs) are transforming industries through advanced natural language processing (NLP). However, deploying these models demands scalable infrastructure to efficiently manage their significant computational needs. This section covers the challenges and components of a scalable infrastructure essential for meeting the dynamic demands of LLMs.

Technical Explanation of LLM Infrastructure

Hosting LLMs involves considerable challenges due to their complexity and size. Key infrastructure demands include:

Compute Resources: GPUs or other accelerators carry most of the training and inference workload, while high-performance CPUs handle request handling, preprocessing, and orchestration.

Storage Solutions: Efficient storage systems are needed to provide rapid access to large datasets essential for training and inference.

Networking: High bandwidth and low-latency connections are crucial to enable seamless interaction between components.

Sizing these three components together, rather than in isolation, helps an infrastructure satisfy current requirements and remain adaptable to future needs.
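As a rough illustration of the compute demand, the memory needed just to hold a model's weights can be estimated as parameter count times bytes per parameter. The sketch below assumes common numeric formats (4, 2, and 1 bytes per parameter) and a hypothetical 80 GiB accelerator; the function names are illustrative, and the estimate ignores activations, key-value caches, and framework overhead, so real requirements will be higher.

```python
import math

# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(num_params: int, dtype: str = "fp16") -> float:
    """Memory needed to hold the weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


def min_gpus_for_weights(num_params: int, gpu_memory_gib: float,
                         dtype: str = "fp16") -> int:
    """Lower bound on accelerator count: weights only, no runtime overhead."""
    return math.ceil(weight_memory_gib(num_params, dtype) / gpu_memory_gib)


if __name__ == "__main__":
    # Hypothetical 70-billion-parameter model on hypothetical 80 GiB devices.
    print(round(weight_memory_gib(70_000_000_000), 1), "GiB of weights")
    print(min_gpus_for_weights(70_000_000_000, gpu_memory_gib=80), "devices minimum")
```

Treat the result as a floor for capacity planning, not a deployment recommendation.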

Fundamentals of Large Language Model Hosting

Cloud Architecture Essentials

The architecture within cloud computing plays a pivotal role in hosting LLMs. Various hosting strategies impact performance, cost, and scalability differently. This section examines cloud solution architectures, weighing the benefits and drawbacks of each hosting model.

Technical Discussion of Hosting Strategies

The hosting of LLMs in the cloud encompasses multiple architecture layers:

Frontend Interfaces: The user-facing layer, facilitating interaction with LLM applications.

Backend Services: Where data processing and model deployment occur.

Data Management Systems: Key to managing data ingestion, processing, and storage.

Hosting Options

Dedicated Hosting: Offers the greatest control over resources, but at a higher cost and with less flexibility to scale up or down.

Shared Hosting: Cost-effective but may suffer from resource contention.

Containerized Solutions: Packaging models and their dependencies in containers (for example, with Docker) enables scalable, portable deployments across various environments.

Evaluating these options involves assessing factors such as cost-effectiveness, dynamic scaling capabilities, and performance metrics.

Efficient Resource Allocation Strategies

Optimizing Resource Use

Efficient resource allocation is vital in cloud environments to optimize both performance and costs. This section explores dynamic resource management strategies, including autoscaling, which adjusts resources according to demand.

In-Depth Look at Resource Management

Resource allocation means distributing computational assets such as CPU, memory, and storage across workloads. Effective allocation avoids both over-provisioning and shortages:

Autoscaling: Dynamically adjusts resource availability based on demand metrics like CPU load and traffic volume.

Load Balancing: Evenly distributes incoming requests across servers to avoid overloading any single unit.
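The two mechanisms above can be sketched in a few lines. This is a minimal illustration, not a production autoscaler: it assumes a single demand metric (for example, average CPU utilization in percent) and uses a proportional rule similar to the one documented for the Kubernetes Horizontal Pod Autoscaler, without its tolerance and stabilization logic. The function names, replica limits, and server names are hypothetical.

```python
import math
from itertools import cycle


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule: replicas grow with the metric/target ratio."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the allowed range so a traffic spike cannot scale without bound.
    return max(min_replicas, min(max_replicas, raw))


def round_robin(servers):
    """Return an endless iterator that hands out servers in rotation."""
    return cycle(servers)


if __name__ == "__main__":
    # 4 replicas at 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
    balancer = round_robin(["server-a", "server-b", "server-c"])
    print([next(balancer) for _ in range(4)])
```

Round-robin is the simplest balancing policy; it ignores how busy each server is, which matters for LLM requests whose processing time varies widely.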

Exercise: Configure autoscaling using cloud resource management tools, and design models to predict future allocation expenses.

Integrating Vector Databases for Enhanced Performance

Boosting LLM Performance with Vector Databases

Vector databases are critical in enhancing LLM performance due to their capability of efficiently storing and retrieving high-dimensional data. This section discusses their role in LLM inference and explores methods for seamless integration.

Technical Insight into Vector Databases

Vector databases optimize management of high-dimensional vectors, crucial for language embeddings, by:

Enabling Fast Retrieval: Quickly finding the stored vectors nearest to a query vector, which shortens the retrieval step of an AI pipeline.

Ensuring Scalability: Supporting extensive data sizes without degrading performance.

Integration typically stores embeddings produced by a model in the vector database and queries them at inference time, allowing real-time data updates and quicker retrievals, which improves responsiveness.
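To make the retrieval step concrete, here is a minimal sketch of nearest-neighbor search using cosine similarity. It compares the query against every stored vector, which is only practical for small collections; vector databases generally rely on approximate nearest-neighbor indexes to stay fast at scale. The three-dimensional vectors and document IDs are toy values invented for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the IDs of the k stored vectors most similar to the query."""
    ranked = sorted(index.items(),
                    key=lambda item: cosine_similarity(query, item[1]),
                    reverse=True)
    return [doc_id for doc_id, _ in ranked[:k]]


# Toy "embeddings"; real ones have hundreds or thousands of dimensions.
INDEX = {
    "gpu": [1.0, 0.0, 0.0],
    "storage": [0.0, 1.0, 0.0],
    "network": [0.0, 0.0, 1.0],
    "accelerator": [0.9, 0.1, 0.0],
}

if __name__ == "__main__":
    print(top_k([1.0, 0.05, 0.0], INDEX, k=2))
```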

Latency Optimization Techniques

Reducing Delays in AI Applications

Minimizing latency is essential for timely AI application responses. This section identifies latency sources and presents strategies to cut down response times in LLM hosting.

Detailed Examination of Latency Causes

Latency is the elapsed time between a request and its response, affected by:

Network Delays: Resulting from data travel times.

Processing Delays: Time taken by servers to process data.

Data Retrieval Delays: Time spent accessing database-stored data.
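One way to reason about these three sources is to record them separately for each request and see which one dominates. The sketch below is an assumption about how such a breakdown might be modeled; the class name, field names, and millisecond figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LatencyBreakdown:
    """Per-request latency split into the three sources listed above."""
    network_ms: float
    processing_ms: float
    retrieval_ms: float

    def total_ms(self) -> float:
        return self.network_ms + self.processing_ms + self.retrieval_ms

    def dominant(self) -> str:
        """Name of the component that contributes the most delay."""
        parts = {
            "network": self.network_ms,
            "processing": self.processing_ms,
            "retrieval": self.retrieval_ms,
        }
        return max(parts, key=parts.get)


if __name__ == "__main__":
    request = LatencyBreakdown(network_ms=40, processing_ms=250, retrieval_ms=30)
    print(request.total_ms(), request.dominant())
```

Knowing the dominant component indicates where optimization effort is likely to pay off first.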

Techniques for Latency Reduction

Caching: Saves frequently used data in memory for quicker access.

Edge Computing: Processes data closer to users or data sources to lower network delays.
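Caching can be sketched with Python's standard `functools.lru_cache`, which keeps recent results in memory and evicts the least recently used entry when full. The `generate` function below is a hypothetical stand-in for a slow model call, and the cache size is arbitrary. Exact-match caching like this only helps when identical prompts recur and a repeated answer is acceptable.

```python
from functools import lru_cache


@lru_cache(maxsize=128)
def generate(prompt: str) -> str:
    """Hypothetical stand-in for an expensive model inference call."""
    # A real implementation would call the model here.
    return f"response to: {prompt}"


if __name__ == "__main__":
    generate("What is autoscaling?")   # computed and stored (a miss)
    generate("What is autoscaling?")   # served from memory (a hit)
    print(generate.cache_info())
```

`cache_info()` reports hits and misses, which gives a quick view of whether the cache is earning its memory.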

Exercise: Implement caching for frequently accessed models and adjust infrastructure based on latency analysis.

Cost Management for Cloud Services

Controlling Cloud Costs

Effective cost management ensures that cloud services remain efficient without exceeding budget limits. This section outlines cloud service cost structures and strategies for cost savings without sacrificing performance.

Detailed Breakdown of Cost Strategies

Cloud costs consist of compute, storage, and data transfer expenses. Key strategies for managing costs include:

Right-Sizing: Matching resources to actual usage to avoid waste.

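Reserved Instances: Committing to longer-term usage in exchange for discounted rates.

Spot Instances: Leveraging spare provider capacity at reduced rates, with the trade-off that the provider can reclaim the instances on short notice.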

Balancing these strategies maximizes the cost-effectiveness of cloud solutions.
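The arithmetic behind combining these strategies can be sketched as follows. All rates and discounts here are hypothetical placeholders, not real provider prices; the example assumes a steady baseline covered by a reserved commitment and occasional bursts covered by spot capacity.

```python
def monthly_cost(hourly_rate: float, hours: float, discount: float = 0.0) -> float:
    """Cost of running one instance for `hours` at a discounted hourly rate."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return hourly_rate * hours * (1.0 - discount)


def blended_monthly_cost(on_demand_rate: float, baseline_hours: float,
                         burst_hours: float, reserved_discount: float,
                         spot_discount: float) -> float:
    """Baseline on reserved pricing plus bursts on spot pricing."""
    baseline = monthly_cost(on_demand_rate, baseline_hours, reserved_discount)
    burst = monthly_cost(on_demand_rate, burst_hours, spot_discount)
    return baseline + burst


if __name__ == "__main__":
    # Hypothetical figures: $4.00/hour on demand, 50% reserved and 75% spot discounts.
    all_on_demand = monthly_cost(4.0, 720 + 100)
    blended = blended_monthly_cost(4.0, 720, 100, 0.5, 0.75)
    print(all_on_demand, blended)
```

A comparison like this leaves out the operational cost of handling spot interruptions, which should be weighed before moving latency-sensitive inference onto spot capacity.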

Case Studies of Successful Implementations

Learning from Real-World Successes

Analyzing real-world successful LLM infrastructure implementations offers valuable insights. This section examines effective examples of scalable infrastructures, extracting actionable lessons.

Technical Review of Case Studies

A renowned tech company exemplified success with autoscaling and vector database integration, handling billions of queries seamlessly. Key success factors included:

Dynamic Resource Management: Autoscaling allowed real-time resource adjustments, avoiding the cost of idle capacity.

Optimized Retrieval: Employing vector databases decreased data access times, enhancing performance.

Studying such cases aids in understanding practical applications and avoiding typical challenges.

Best Practices and Common Pitfalls

Designing Robust Infrastructure

Embracing best practices and recognizing common pitfalls are essential for building effective LLM infrastructures. This section summarizes these practices and potential pitfalls.

Technical Overview of Best Practices and Pitfalls

Best Practices:

Use containers with an orchestrator (e.g., Kubernetes) for scalable deployments.

Implement Continuous Integration/Continuous Deployment (CI/CD) for seamless updates.

Regularly monitor resource metrics for optimal efficiency.

Common Pitfalls:

Neglecting peak usage planning can lead to shortages.

Over-provisioning causes financial waste.

Unoptimized database queries increase delay times.

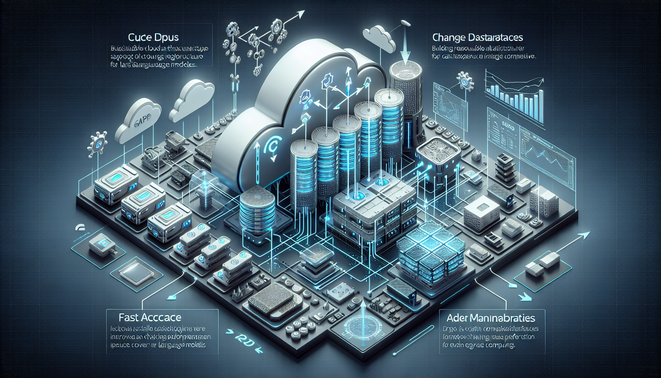

Visual Aid Suggestions

Scalable Architecture Diagram: Show a visual of cloud components used in LLM hosting and inference enhancement.

Autoscaling Flowchart: Visualize decisions based on varying traffic patterns.

Key Takeaways

Scalable infrastructure is essential for LLM hosting, requiring knowledge of computing, storage, and network components.

Diverse hosting solutions present unique benefits and must be carefully assessed.

Effective resource allocation strategies, including autoscaling, are vital for cost and performance benefits.

Vector databases significantly enhance LLM performance by optimizing data retrieval.

Latency reductions focus on mitigating network, processing, and data retrieval delays.

Cost management, through strategic resource purchasing, ensures budget efficiency.

Successful implementations offer insights into best practices and pitfalls to avoid.

Glossary

Large Language Model (LLM): An AI model trained to understand and generate natural language text.

Vector Database: A database specialized for storing and retrieving high-dimensional vectors, such as the embeddings used in AI tasks.

Autoscaling: Cloud feature that modifies resource count based on real-time demand.

Latency: Time delay from request issuance to system response.

Inference: Model execution on new data for generating predictions.

Knowledge Check

What is meant by resource allocation in cloud hosting?

A) Distributing tasks evenly across servers

B) Adjusting resources like CPU and memory based on demand

C) Storing data efficiently in the cloud

D) None of the above

Explain how vector databases improve performance in language model inference.

Short Answer: Vector databases enhance performance by managing high-dimensional data retrieval effectively, speeding up inference.
