Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

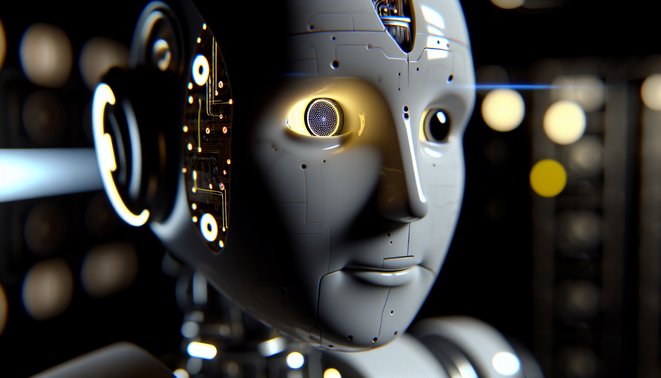

Cache-to-Cache (C2C) is a method in which large language models (LLMs) communicate directly through their KV-Cache instead of exchanging text tokens. Developed by AI research institutions, the approach aims to reduce communication overhead, because the receiving model no longer has to wait for the sender to generate text and then re-read it, and it may help preserve privacy. By letting models share semantic information at the cache level, C2C could make collaboration between models faster and more scalable.
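To make "sharing at the cache level" more concrete, here is a minimal toy sketch in plain Python. It is not the C2C authors' implementation: the `fuse_cache` function, the linear `projection` matrix, and the scalar `gate` are all hypothetical names and simplifications chosen for illustration. The only idea it shows is that one model's cached entries can be mapped into another model's representation space and blended with that model's own cache, with no text exchanged.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def fuse_cache(target: Matrix, source: Matrix, projection: Matrix, gate: float) -> Matrix:
    """Blend a projected source cache into the target cache (illustrative only).

    target:     (seq_len x d_tgt) key or value entries of the receiving model
    source:     (seq_len x d_src) entries from the sending model
    projection: (d_src x d_tgt) hypothetical mapping between the two spaces
    gate:       scalar in [0, 1]; 0 leaves the target cache unchanged
    """
    if not 0.0 <= gate <= 1.0:
        raise ValueError("gate must be in [0, 1]")
    if len(target) != len(source):
        raise ValueError("caches must cover the same number of positions")
    projected = matmul(source, projection)
    return [
        [(1.0 - gate) * t + gate * p for t, p in zip(t_row, p_row)]
        for t_row, p_row in zip(target, projected)
    ]


# Toy data: 2 token positions, source width 3, target width 2.
target_keys = [[1.0, 0.0], [0.0, 1.0]]
source_keys = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
projection = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]  # assumed mapping; drops the third source dimension

fused_keys = fuse_cache(target_keys, source_keys, projection, gate=0.5)
print(fused_keys)
```

In a real system the mapping between caches would presumably be learned and applied per layer and per attention head; the fixed matrix and single scalar gate here only keep the example small enough to read at a glance.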

For developers and AI system architects, C2C offers a different way to optimize multi-model interactions without trading away data security or performance. Potential applications range from privacy-sensitive industries to large-scale AI deployments that need secure model-to-model communication. If the approach holds up in practice, it could be an important step toward AI frameworks in which models cooperate more efficiently.